ALL COURSES

CATEGORIES

- Scrum Fundamentals Certified (SFC) | SCRUMstudy
- Scrum Developer Certified (SDC) | SCRUMstudy
- Scrum Master Certified (SMC) | SCRUMstudy
- SCRUMstudy Agile Master Certified (SAMC)
- Scrum Product Owner Certified (SPOC) | SCRUMstudy
- Scaled Scrum Master Certified (SSMC) | SCRUMstudy
- Scaled Scrum Product Owner Certified (SSPOC) | SCRUMstudy
- Expert Scrum Master Certified (ESMC) | SCRUMstudy
- Scrum for Operations & DevOps Fundamentals (SODFC) | SCRUMstudy
- Scrum for Operations & DevOps Experts (SODEC) | SCRUMstudy
- SCRUMstudy Recertification

- Certified ScrumMaster (CSM) | Scrum Alliance®
- Advanced Certified ScrumMaster (A-CSM) | Scrum Alliance®
- Certified Scrum Product Owner (CSPO) | Scrum Alliance®
- Advanced Certified Scrum Product Owner (A-CSPO) | Scrum Alliance®
- PMI-ACP® Certification

- Six Sigma Green Belt (SSGB)
- Lean Six Sigma Green Belt (LSSGB)
- Six Sigma Black Belt (SSBB)
- Lean Six Sigma Black Belt (LSSBB)

6sigmastudy Recertification

- Big Data Hadoop
- Hadoop Administration
- Apache Spark and Scala
- Apache Cassandra
- MongoDB®
- ELK Stack
- Talend for Data Integration and Big Data
- Apache Kafka

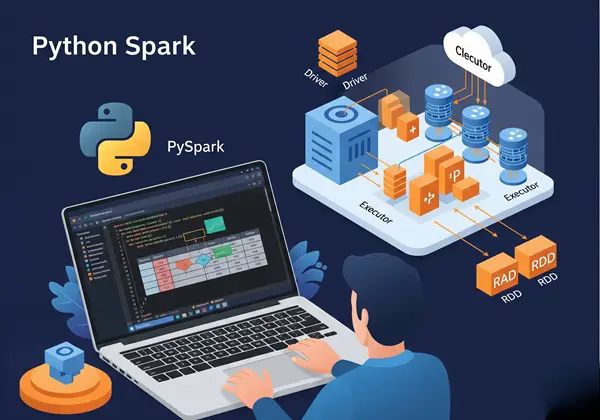

- Data Science Using R
- Data Analytics with R
- Microsoft Power BI with Gen AI
- Tableau
- Python for Data Science
- Python Programming
- Python Spark using PySpark
- Machine Learning using Python
- QlikView
- Informatica

- AWS Architect
- AWS Development
- AWS SysOps Administrator
- Google Cloud Platform
- Microsoft Azure Administrator
- Microsoft Azure Architect
- Microsoft Azure Developer Associate
- Microsoft Azure DevOps
- Salesforce Administrator and App Builder
- Salesforce Platform Developer 1 Certification

- Agentic AI Certification
- Advanced Artificial Intelligence
- Graphical Models
- Machine Learning Operations
- Machine Learning with Mahout
- Prompt Engineering Certification Training with LLM
- Reinforcement Learning
- Deep Learning with TensorFlow 2.0
- Natural Language Processing with Python